VigyaanCD (http://www.vigyaancd.org/) has a nice collection of bioinformatics software and is worth downloading. It boots directly from the CD and needs very little Linux expertise, as its X Window System desktop looks and behaves much like Windows.

Welcome to my website. This website was built to address queries from students and upcoming professionals in the field of life sciences. Although the website presents itself as a bioinformatics portal and most of the discussion revolves around bioinformatics and biotechnology, we prefer to describe its scope as the life sciences. Please share your comments about this blog so that it can be customized to your needs. Thank you.

Your own Bioinformatics workstation using VigyaanCD

R is a language and environment for statistical computing and graphics. It is a GNU project which is similar to the S language and environment which was developed at Bell Laboratories (formerly AT&T, now Lucent Technologies) by John Chambers and colleagues. R can be considered as a different implementation of S. There are some important differences, but much code written for S runs unaltered under R.

R provides a wide variety of statistical (linear and nonlinear modelling, classical statistical tests, time-series analysis, classification, clustering, ...) and graphical techniques, and is highly extensible. The S language is often the vehicle of choice for research in statistical methodology, and R provides an Open Source route to participation in that activity.

All images on this site are Copyright (C) the R Foundation and may be reproduced for any purpose provided they are credited to the R statistical software using an attribution like "(C) R Foundation, from http://www.r-project.org".

One of R's strengths is the ease with which well-designed publication-quality plots can be produced, including mathematical symbols and formulae where needed. Great care has been taken over the defaults for the minor design choices in graphics, but the user retains full control.

R is an integrated suite of software facilities for data manipulation, calculation and graphical display.

The term "environment" is intended to characterize it as a fully planned and coherent system, rather than an incremental accretion of very specific and inflexible tools, as is frequently the case with other data analysis software.

R, like S, is designed around a true computer language, and it allows users to add additional functionality by defining new functions. Much of the system is itself written in the R dialect of S, which makes it easy for users to follow the algorithmic choices made. For computationally-intensive tasks, C, C++ and Fortran code can be linked and called at run time. Advanced users can write C code to manipulate R objects directly.

Many users think of R as a statistics system. We prefer to think of it as an environment within which statistical techniques are implemented. R can be extended (easily) via packages. There are about eight packages supplied with the R distribution and many more are available through the CRAN family of Internet sites covering a very wide range of modern statistics.

R has its own LaTeX-like documentation format, which is used to supply comprehensive documentation, both on-line in a number of formats and in hardcopy.

R (programming language)

Well, all I can say is that the idea is worth trying, but the intentions matter more. Nowadays a lot of self-promotional open movements are playing around, and you cannot stake on each and every effort. Suddenly there is a flood of "Open" movements, and people are really confused about what is going on. To me it looks like a rat race to win a Nobel Prize for the "Open" movement.

We were admittedly disappointed by the lack of openness in the development of the Cloud Manifesto. What we heard was that there was no desire to discuss, much less implement, enhancements to the document despite the fact that we have learned through direct experience. Very recently we were privately shown a copy of the document, warned that it was a secret, and told that it must be signed "as is," without modifications or additional input. It appears to us that one company, or just a few companies, would prefer to control the evolution of cloud computing, as opposed to reaching a consensus across key stakeholders (including cloud users) through an “open” process. An open Manifesto emerging from a closed process is at least mildly ironic.

Moving Towards Open Cloud?

Lowering Pharma firewalls: Just for Bioinformatics or Chemoinformatics also

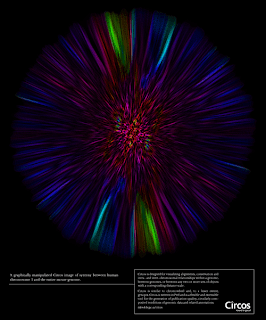

CIRCOS - VISUALIZING THE GENOME, AMONG OTHER THINGS

Melanie Swan has written an interesting piece about the expanding notion of computing on her blog, Broader Perspective. In response to the question of how computing definitions and realms are evolving, she writes:

the traditional linear Von Neumann model is being extended with new materials, 3D architectures, molecular electronics and solar transistors. Novel computing models are being investigated such as quantum computing, parallel architectures, cloud computing, liquid computing and the cell broadband architecture like that used in the IBM Roadrunner supercomputer. Biological computing models and biology as a substrate are also under exploration with 3D DNA nanotechnology, DNA computing, biosensors, synthetic biology, cellular colonies and bacterial intelligence, and the discovery of novel computing paradigms existing in biology such as the topological equations by which ciliate DNA is encrypted.

Evolving computing definitions and realms

[T]he overwhelming majority of genes bound by a particular transcription factor (TF) are not affected when that factor is knocked out. Here, we show that this surprising result can be partially explained by considering the broader cellular context in which TFs operate. Factors whose functions are not backed up by redundant paralogs show a fourfold increase in the agreement between their bound targets and the expression levels of those targets.

It's extremely rare in nature that a cell would lose both a master gene and its backup, so for the most part cells are very robust machines. We now have reason to think of cells as robust computational devices, employing redundancy in the same way that enables large computing systems, such as Amazon, to keep operating despite the fact that servers routinely fail.
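The redundancy argument in the quote can be made concrete with a little arithmetic. The sketch below assumes that each copy (a gene and its backup paralog, or a server and its replica) fails independently with the same probability; both the independence assumption and the numbers used are illustrative and do not come from the quoted study.

```python
def loss_probability(p_fail: float, copies: int) -> float:
    """Probability that all `copies` independent copies fail at once.

    Assumes independent failures with the same probability `p_fail`
    (an illustrative simplification, not a claim about real cells or servers).
    """
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be between 0 and 1")
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return p_fail ** copies


# Hypothetical failure rate of 1% per copy.
single = loss_probability(0.01, copies=1)   # function lost if the only copy fails
backed_up = loss_probability(0.01, copies=2)  # function lost only if both fail
```

Under these assumptions a single backup makes a total loss a hundred times less likely, which is the intuition behind calling both cells and large server farms robust.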

Backup and fault tolerance in systems biology: Striking similarity with Cloud computing

Katherine Mejia asked this question on Twitter. For now, I want to keep the answer short.

Visual comparison of SBML, BioPAX, CellML and PSI

BioPAX or SBML?

Multidisciplinarity is the act of joining together two or more disciplines without integration. Each discipline yields discipline specific results while any integration would be left to a third party observer. An example of multidisciplinarity would be a panel presentation on the many facets of the AIDS pandemic (medicine, politics, epidemiology) in which each section is given as a stand-alone presentation.

A multidisciplinary community or project is made up of people from different disciplines and professions who are engaged in working together as equal stakeholders in addressing a common challenge. The key question is how well the challenge can be decomposed into nearly separable subparts, and then addressed via the distributed knowledge in the community or project team. The lack of shared vocabulary between people and communication overhead is an additional challenge in these communities and projects. However, if similar challenges of a particular type need to be repeatedly addressed, and each challenge can be properly decomposed, a multidisciplinary community can be exceptionally efficient and effective. A multidisciplinary person is a person with degrees from two or more academic disciplines, so one person can take the place of two or more people in a multidisciplinary community or project team. Over time, multidisciplinary work typically leads to neither an increase nor a decrease in the number of academic disciplines.

"Interdisciplinarity" in referring to an approach to organizing intellectual inquiry is an evolving field, and stable, consensus definitions are not yet established for some subordinate or closely related fields.

An interdisciplinary community or project is made up of people from multiple disciplines and professions who are engaged in creating and applying new knowledge as they work together as equal stakeholders in addressing a common challenge. The key question is what new knowledge (of an academic discipline nature), which is outside the existing disciplines, is required to address the challenge. Aspects of the challenge cannot be addressed easily with existing distributed knowledge, and new knowledge becomes a primary subgoal of addressing the common challenge. The nature of the challenge, either its scale or complexity, requires that many people have interactional expertise to improve their efficiency working across multiple disciplines as well as within the new interdisciplinary area. An interdisciplinary person is a person with degrees from one or more academic disciplines with additional interactional expertise in one or more additional academic disciplines, and new knowledge that is claimed by more than one discipline. Over time, interdisciplinary work can lead to an increase or a decrease in the number of academic disciplines.

Bioinformatics and Systems Biology: Multidisciplinary scientists versus Interdisciplinary scientists

The next Level of SBML will be modular, in the sense of having a defined core set of features and optional packages adding features on top of the core. This modular approach means that models can declare which feature-sets they use, and likewise, software tools can declare which packages they support. Apart from the SBML Level 3 Core, the community is also reviewing several other proposals for optional extension packages such as Layout, Rendering, Multistate multicomponent species, Hierarchical Model Composition, Geometry, Qualitative Models and many more. Nearly all Level 3 activities are currently in the proposal stage.
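The idea of models declaring the packages they use and tools declaring the packages they support can be sketched as a simple set comparison. This is a minimal illustration in plain Python, not the SBML or libSBML API; the `Model` and `Tool` classes, the function names, and the package labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Model:
    """A model plus the optional packages it declares (hypothetical structure)."""
    name: str
    packages: FrozenSet[str] = frozenset()  # empty set means core features only


@dataclass(frozen=True)
class Tool:
    """A software tool plus the optional packages it declares support for."""
    name: str
    supported: FrozenSet[str] = frozenset()


def missing_packages(model: Model, tool: Tool) -> FrozenSet[str]:
    """Packages the model uses that the tool does not support."""
    return model.packages - tool.supported


def can_fully_read(model: Model, tool: Tool) -> bool:
    """True when the tool supports every package the model declares."""
    return not missing_packages(model, tool)
```

With declarations like these, a core-only model is readable by any tool, while a tool that supports only a hypothetical "layout" package can report exactly which packages it lacks for a model that also uses "qualitative-models".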

SBML Level 3 is arriving

Why have modeling approaches yet to be embraced in the mainstream of biology, in the way that they have been in other fields such as physics, chemistry and engineering? What would the ideal biological modeling platform look like? How could the connectivity of the internet be leveraged to play a central role in tackling the enormous challenge of biological complexity?

A Meditation on Biological Modeling

The translation of a gene network model from a genetic sequence is very similar to the compilation of the source code of a computer program into an object code that can be executed by a microprocessor (Figure 1). The first step consists in breaking down the DNA sequence into a series of genetic parts by a program called the lexer or scanner. Since the sequence of a part may be contained in the sequence of another part, the lexer is capable of backtracking to generate all the possible interpretations of the input DNA sequences as a series of parts. All possible combinations of parts generated by the lexer are sent to a second program called the parser to analyze if they are structurally consistent with the language syntax. The structure of a valid series of parts is represented by a parse tree (Figure 2). The semantic evaluation takes advantage of the parse tree to translate the DNA sequence into a different representation such as a chemical reaction network. The translation process requires attributes and semantic actions. Attributes are properties of individual genetic parts or combinations of parts. Semantic actions are associated with the grammar production rules. They specify how attributes are computed. Specifically, the translation process relies on the semantic actions associated with parse tree nodes to synthesize the attributes of the construct from the attributes of its child nodes, or to inherit the attributes from its parental node.
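The lexer, parser and semantic evaluation steps described above can be sketched in a few lines of Python. This is a toy illustration and not the authors' implementation: the parts library, the sequences, the one-rule grammar (a design is one or more promoter, RBS, gene, terminator cassettes) and the reaction strings are all assumptions made up for the example.

```python
from typing import Dict, Iterator, List, Optional, Tuple

# Hypothetical parts library: name -> (category, sequence). Toy sequences only.
PARTS: Dict[str, Tuple[str, str]] = {
    "pA": ("promoter", "TTGACA"),
    "rbs1": ("rbs", "AGGA"),
    "rbs2": ("rbs", "AGGAGG"),  # contains the sequence of rbs1
    "gfp": ("gene", "ATGAAA"),
    "t0": ("terminator", "GG"),
    "t1": ("terminator", "TTTT"),
}

# Assumed grammar: design -> cassette+ ; cassette -> promoter rbs gene terminator
CASSETTE = ("promoter", "rbs", "gene", "terminator")

Tree = Tuple[str, List[Tuple[str, List[str]]]]


def lex(seq: str, start: int = 0) -> Iterator[List[str]]:
    """Backtracking lexer: yield every way to read seq[start:] as a series of parts."""
    if start == len(seq):
        yield []
        return
    for name, (_, part_seq) in PARTS.items():
        if seq.startswith(part_seq, start):
            for rest in lex(seq, start + len(part_seq)):
                yield [name] + rest


def parse(tokens: List[str]) -> Optional[Tree]:
    """Return a parse tree if the parts follow the grammar, otherwise None."""
    size = len(CASSETTE)
    if not tokens or len(tokens) % size != 0:
        return None
    cassettes = []
    for i in range(0, len(tokens), size):
        group = tokens[i:i + size]
        if tuple(PARTS[name][0] for name in group) != CASSETTE:
            return None
        cassettes.append(("cassette", group))
    return ("design", cassettes)


def evaluate(tree: Tree) -> List[str]:
    """Semantic actions: synthesize the design's reactions from its child cassettes."""
    reactions: List[str] = []
    for _, (promoter, _rbs, gene, _terminator) in tree[1]:
        mrna = f"mRNA_{gene}"
        reactions.append(f"{promoter} -> {promoter} + {mrna}")  # transcription
        reactions.append(f"{mrna} -> {mrna} + protein_{gene}")  # translation
    return reactions


def compile_dna(seq: str) -> List[List[str]]:
    """Lex, parse and evaluate; keep only structurally valid interpretations."""
    networks = []
    for tokens in lex(seq):
        tree = parse(tokens)
        if tree is not None:
            networks.append(evaluate(tree))
    return networks


EXAMPLE = "TTGACAAGGAGGATGAAATTTT"
```

For `EXAMPLE`, the lexer finds two readings because `rbs1` is a prefix of `rbs2`: one reading uses `rbs1` followed by the short terminator `t0`, the other uses `rbs2`. Only the second matches the assumed grammar, so `compile_dna(EXAMPLE)` returns a single reaction network. Here attributes flow only upward from child nodes (synthesized attributes); inherited attributes, which the text also mentions, are left out to keep the sketch short.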

Figure 2.

What synthetic biology can learn from programming languages
